Inference API Deployment

Launches a REST API that accepts data and serves inferences from the loaded model (defined in deployment_specs.model_specs).

By default, a Grafana instance is also launched to monitor model performance.

class InferenceAPIDeployment

Kubernetes Objects:

ConfigMap

Deployment

Service

HorizontalPodAutoscaler

Fields:

type: inferenceAPIDeployment

name: string, required - unique name of the deployment

template: string, default: src/octaipipe/configs/cloud_deployment/inference_api_deployment_template.yml

kubernetes_namespace: string, default: default

monitor_model: bool, default: True - sets up a Grafana dashboard which monitors model performance

deployment_specs: dict - see Placeholders
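As a rough illustration of how the fields above fit together, a single deployment entry might look like the sketch below. This is not taken from the product's own examples: the deployment name is hypothetical, the placement of this entry inside a larger configuration file is not shown and is an assumption, and the content of model_specs is left as a placeholder because its format is not described in this part of the page.

```yaml
# Hypothetical deployment entry built only from the fields listed above.
# How this entry nests inside the full configuration file is an assumption.
type: inferenceAPIDeployment
name: example-inference-api   # hypothetical; must be unique
template: src/octaipipe/configs/cloud_deployment/inference_api_deployment_template.yml  # documented default
kubernetes_namespace: default  # documented default
monitor_model: True            # documented default; sets up the Grafana dashboard
deployment_specs:
  api_http_port: 8222          # documented default placeholder value
  model_specs: |
    <model specification goes here>
```

Fields that match their documented defaults (template, kubernetes_namespace, monitor_model, api_http_port) are written out here only for visibility and could presumably be omitted.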

Placeholders (deployment_specs or env variables):

deployment_name: string, default = deployment’s name

api_http_port: int, default = 8222

MONITOR_DB_URL: string, default = monitor DB client URL

deployment_id: string, default = deployment id

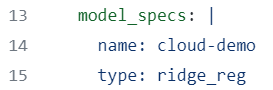

model_specs: multi-line scalar value, required

e.g.: